How to Detect AI-Generated Content & What AI Writing Means for SEO (2024)

If you’re working with writers and worried about AI-generated content being submitted, this guide is for you. And if you’re a writer who has received feedback that your content reads as AI-generated, even though you’re sure you submitted human-written text, you’ll find this guide valuable too.

We’ve spent around six months improving the way we detect AI content, as well as fine-tuning our writing so that our work doesn’t sound AI-written. Even the most advanced AI detector is still a robot, and sometimes it gets things wrong.

If you’d asked our senior editors if they could spot AI-generated content in January 2024, they would have confidently said yes.

But in a very short time, artificial intelligence has progressed, and we now see the importance of AI content detectors.

Read on to discover the most reliable AI detection tool we’ve found, as well as how we handled a low human content score even with human-generated content.

The Rise of AI-Generated Text in SEO Content

In the last couple of years, we’ve all seen a definite rise in AI technology across industries. It’s not all bad, and some of the things we can do will drastically cut down on wasted time at work. But we still know that to create great content, you need great writers — human ones.

Our team has always tried to stay ahead of trends and new tools so that, even if we don’t use them, we’re not blindsided by anything.

Our first taste of AI writing was back in 2021 when we tested out a brand-new tool, Jasper.ai. The reactions in the team were mixed, but the consensus was that our jobs as SEO content writers and strategists were safe.

Then came ChatGPT — a much faster advancement than we had seen before. This was free to use and seemed undetectable.

How were we going to ensure that our writers weren’t relying on AI to write content and that we were still producing quality SEO-focused pieces?

It didn’t happen overnight, but thanks to many hours of testing and research, as well as working closely with a couple of our clients, we now have AI-checking built into our processes.

And now, we can share what we’ve learned and give you some of our tried-and-tested methods for detecting (and avoiding) AI-generated content.

Is AI Content Detection Necessary?

Generally speaking, AI-generated content is not the enemy here, but Google has reiterated many times now the importance of E-E-A-T in content. To dominate the SERPs, websites need to display helpful content that demonstrates first-hand experience.

Does an AI bot have first-hand experience in planning a travel itinerary or testing out the latest and greatest travel tech? Nope. But humans do.

At first, the only way we were detecting AI-generated content was by pure human editing. And this worked for a while.

We could tell when sentences didn’t flow naturally or pieces of paragraphs seemed to be stuck together like a collage rather than telling a coherent story.

There would also be the occasional use of words that weren’t used in everyday language or didn’t quite fit the sentence.

But then we realized just how good artificial intelligence was getting — in doing tests, we could hardly tell the difference between human writing and AI-generated text.

Can Google Detect AI Content?

Are we doing all of this detection work for nothing? Can the Google Gods really detect AI-generated text? Turns out they can!

The plot twist, though, is that Google isn’t as concerned about whether or not you’re using artificial intelligence tools to write your content. They just want to offer the most helpful content on the SERPs.

The Dangers of AI Written Content

So, if Google doesn’t care if we’re using AI software to help populate our blogs, should we even bother with detector tools? Definitely.

While it’s only a matter of time before AI content is almost undetectable, there are still risks to relying on any non-human tools to write your content.

These tools are limited in their knowledge as well as their creativity. Not only do you have to worry about incorrect facts, but there’s also the risk of your content not reflecting the voice that your readers are used to.

How Reliable Are AI Detectors?

Our team has tried out almost every AI detection tool out there. We started out with any free version we could find (testing costs money), and these were not consistent at all.

We did purposeful testing, where we pasted completely AI-generated content, then human writing, and then a hybrid mix, and continued to get inconsistent results.

What we’re now using is still not 100% foolproof. On multiple occasions, content that we know was written by a very real human has come back as ‘possible AI’.

But we’ve also done a lot of research on how to avoid this — ensuring that our writers are doing a better job than AI and also calming any nerves that clients may be feeling around artificial intelligence.

Some Content Detection Tools We’ve Tried

While we were checking for AI in newer writers or as spot checks, we used:

- https://writer.com/ai-content-detector/ – definitely picks up robotic-sounding content, but hardly as accurate as it could be.

- https://contentdetector.ai/ – gave us a few semi-accurate results, but wasn’t very consistent.

- https://gptzero.me/ – seems to be improving, but when we tried the free version to detect ChatGPT content, it proved unreliable.

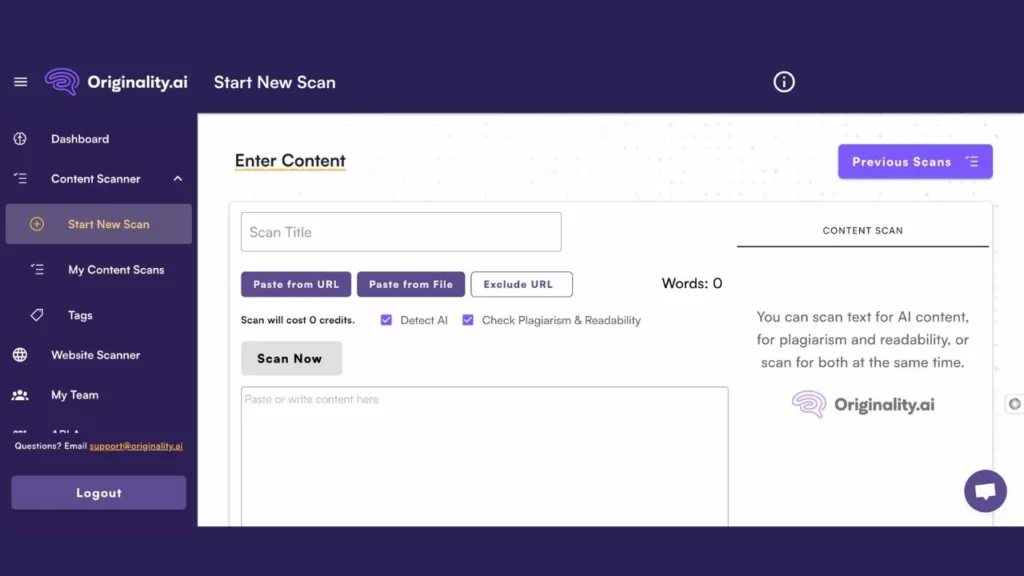

- https://originality.ai/ – the most accurate, but a paid tool.

Originality Pros and Cons

Originality.AI is a highly accurate AI content detection tool, specifically tailored for AI-generated text originating from popular large language models like ChatGPT, GPT-4, and Bard. Its performance is attributed to the advanced AI algorithms that employ natural language processing techniques.

When we first began with Originality’s AI content detection, we put the tool through our usual tests. This included giving it 1000 words written by ChatGPT, then 1000 words written by a human, and then 1000 words created through human-AI collaboration.

The results? Very mixed. They showed that while the tool is more accurate than any free version we tried, it’s still not entirely accurate.

Pros

- Originality gives you shareable links that you can send to writers and your team.

- It also highlights the more ‘AI-probable’ sections of your post and gives you a percentage of how confident the tool is that a human/AI wrote it.

- You can also check each post for readability and plagiarism. We usually check plagiarism with Grammarly, and the results seem similar.

Cons

- You’re charged almost per word that you scan.

- Each scan can only do up to 3000 words, meaning you need to break up longer posts into multiple checks.

- False positives – we still get a few human-written posts that show 50% or higher for AI.
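The 3000-word cap is the one con that is easy to work around with a little scripting. Below is a minimal sketch, assuming you have the post as plain text with paragraphs separated by blank lines. The function name `split_for_scanning` and its parameters are our own for illustration and are not part of any detector's tooling; the resulting chunks would still be pasted into the tool by hand.

```python
def split_for_scanning(text: str, max_words: int = 3000) -> list[str]:
    """Split a post into chunks of at most max_words words.

    Chunks break on paragraph boundaries (blank lines) where possible.
    Whitespace inside each paragraph is normalized to single spaces.
    """
    chunks: list[str] = []
    current: list[str] = []  # paragraphs collected for the chunk in progress
    current_words = 0

    for paragraph in text.split("\n\n"):
        words = paragraph.split()
        if not words:
            continue  # skip empty paragraphs

        # A single paragraph longer than the cap has to be cut by word count.
        while len(words) > max_words:
            if current:
                chunks.append("\n\n".join(current))
                current, current_words = [], 0
            chunks.append(" ".join(words[:max_words]))
            words = words[max_words:]

        # Start a new chunk if this paragraph would push us over the cap.
        if current_words + len(words) > max_words:
            chunks.append("\n\n".join(current))
            current, current_words = [], 0

        current.append(" ".join(words))
        current_words += len(words)

    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Splitting on paragraphs rather than at an exact word count keeps each scan readable, which matters when you share the highlighted results with a writer.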

Detecting AI and Ensuring Our Content is Written by a Human

With the majority of our work being in content for SEO, we’ve been watching and experimenting with AI content and testing its abilities.

We’ve hardly been blown away, but we do believe there is a space for all new tools.

With a team of writers, though, things can become complicated with controlling any use of AI, finding reliable AI content detectors, and ensuring all of our content meets our standards.

First Look at AI Written Text and AI Writers

Two or three years ago, when our team first took a look at AI-generated content, there wasn’t much to worry about. We paid for a tool that allowed us to experiment and stay ahead of the game, but it was only useful for things like social media captions and short, non-personal emails.

For a while, our AI experimenting went quiet as we focused on work. But when ChatGPT was released, and everyone suddenly had full access, we re-addressed the situation with our team.

Starting to Work With AI Detection Tools

Throughout the evolution of AI-generated text, we’ve kept an open conversation with our team. Together we learnt about where AI was going, what the risks might be to our own careers, and how to stay up-to-date on everyday changes.

We also made it clear that while AI wasn’t going anywhere, our main job was to produce high-quality, accurate, and SEO-focused content, none of which could be done to the best of our ability by relying on AI-generated work.

To keep our team strong, we would only use AI detection tools if we found a piece of content that sounded ‘off’. We hire good writers, so if a post is suddenly written awkwardly or with strange language, we have to ensure it isn’t AI-generated.

Eventually, we realized that our clients may be getting worried about the use of AI as well, and we needed to find something more solid. So, we implemented a company-wide AI detection step and recorded our scores in case we ever needed to go back to them (we do this for plagiarism as well).

Human-Created Content That Still Scores High as AI

The problem we found when working with AI content detection tools was that some posts were being flagged with high AI scores even though they were 100% human-written.

This led us to a couple of months of observing all of our written content and finding the patterns of where this was happening.

Finally, we found a solution, sort of. Some of our content has come up, and will still come up, as written by an AI tool, but for the most part, we found just a few guidelines we could follow to showcase human-generated content.

Tone and Style

This was the biggest hurdle we found when it came to scoring content according to an AI content detection tool. The tone and style of your post can heavily affect that score and cause tools to detect AI where there actually isn’t any.

A more formal tone, with a rigid structure and lack of personality, usually means you’re going to have a higher AI score. This isn’t a very accurate detection, though, because you can ask an AI writing tool like ChatGPT to write something in an “exciting” or “inspiring” way and fool the detectors.

To overcome this, we’ve tried to incorporate a chattier tone in everything we write — unless specifically asked not to. This is easier when our clients already have a very casual tone, speaking to readers as if they’re friends rather than in a formal way.

Lack of Personal Touch

Again, unless you specifically ask your AI writing tool to add in personal touches (e.g. “tell me about visiting the pyramids in Egypt as if you’ve been there”), the tone is going to come out very monotonous.

We found that simply adding some humanness to our writing (e.g. “we preferred taking the bus to the train”) helped reduce those pesky false positives from the AI content detector tool.

Repetitive Language

People often use repetitive language patterns to emphasize or clarify their content.

A tool designed to detect AI-generated content could wrongly attribute these repetitions and consistent patterns to AI writing, as some AI-generated content does display repetitive structures. However, this interpretation would be flawed since writers deliberately use repetition for rhetorical or stylistic reasons.

- Keyword use – We also found using the same keywords in certain places pushed the human score down — although keyword stuffing is already something we want to eliminate from content.

- Sentence length – Writing sentences of similar length too often in the same body of text would cause the AI detection tools to believe it was AI content. This is now only really a problem where we’re comparing products or places and where we have lists of products/activities that follow a similar pattern in how we describe them.
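If you want a rough way to spot the sentence-length issue before running a scan, a small script can help. This is only a sketch of a self-check, assuming plain English text and a simple split on sentence-ending punctuation. It is not how Originality or any other detector actually works, and the function name `sentence_length_stats` is our own.

```python
import re
import statistics


def sentence_length_stats(text: str) -> dict[str, float]:
    """Return the sentence count, mean length, and spread of sentence lengths (in words)."""
    # Naive split: break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]

    if len(lengths) < 2:
        # Standard deviation needs at least two sentences.
        return {"sentences": float(len(lengths)), "mean": float(sum(lengths)), "stdev": 0.0}

    return {
        "sentences": float(len(lengths)),
        "mean": statistics.mean(lengths),
        "stdev": statistics.stdev(lengths),
    }
```

A standard deviation close to zero means the sentences are all about the same length, which is the pattern we saw pushing scores toward AI in product and activity lists. What counts as "too uniform" is a judgment call, so treat the number as a prompt to reread the section rather than a pass or fail.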

Accuracy

Inaccurate facts are at the top of our list of ways to spot AI content. Not that humans don’t get things wrong or that AI is never correct. But the thing with AI content tools is that their main job is to give you what you ask for. We would often see AI making up the most believable restaurants or hotels that have never existed, simply to give you a list like you asked.

How well can AI content detection tools pick up inaccurate facts? We’re not too sure yet, but it’s worth noting that inaccuracy is not helpful, so even without a score, this would harm your content’s rankability.

Another note: We did find that if there were a few misspelled words or some not-quite-right grammar, most AI detection tools would mark it as human-written text. This is not very helpful, though, since we do want our content to be error-free, but it was an interesting observation.

Copy & Paste

One experiment that we’re still running is whether tools like Grammarly can mess with AI detection. That includes cases where a post is checked in Grammarly for passive voice, etc., and those suggestions are accepted.

Because we checked for plagiarism in Grammarly Premium, some writers would copy-paste their text into Grammarly and then back into their Google Docs, and it looked like those posts would get higher AI scores.

This only seemed to affect things about 50% of the time, though, so it’s not a definite answer.

A Balance Between Detecting AI Tools and Becoming Better Writers

Even with the best AI writing detectors, we have found false positives and less-than-perfect scoring. We did find, though, that if we were writing our content with the guidelines we picked up from Originality, it typically made what we wrote a lot more powerful and enjoyable to read.

Our process (for now) includes putting every post through an AI detector before we send it out or publish it.

Some projects call for a more facts-based style, and we accept that those will receive higher AI scores than we’d like. But even for these posts, we pick out where we could perhaps write sentences better, add in some personal touches, or demonstrate expertise better.

Personally, I don’t think that any AI detectors can give us 100% accuracy 100% of the time. And I think that trust within the team and constant training and improvement are much more important.

But I also think that our content team has gotten ten times better over the last eight or so months and that by keeping up with the latest technologies, we will only continue to strengthen our skills.

Will We Keep Using Originality AI?

For now, yes. We are still learning and finding a few outlier posts that show 70%+ for content that is 100% human, but overall, we’ve found that Originality AI scores are more accurate than anything else we’ve tried.

Our team’s process is now to check every piece of content in the editing stage, and if there’s a high AI score (usually over 50%), we do some rewriting on the parts that are flagging AI.
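For teams that log their scores in a spreadsheet or script, the editing-stage check described above can be sketched in a few lines. This assumes scores are entered by hand from the detector's report as a fraction between 0 and 1. The `ScanResult` class, the `needs_rewrite` function, and the 0.50 default are illustrative names and values of our own, not part of Originality.

```python
from dataclasses import dataclass

# Our usual cut-off: anything over 50% AI gets a second look.
AI_SCORE_THRESHOLD = 0.50


@dataclass
class ScanResult:
    title: str
    ai_score: float  # 0.0 to 1.0, copied by hand from the detector's report


def needs_rewrite(
    results: list[ScanResult], threshold: float = AI_SCORE_THRESHOLD
) -> list[ScanResult]:
    """Return the posts scoring above the threshold, highest AI score first."""
    flagged = [r for r in results if r.ai_score > threshold]
    return sorted(flagged, key=lambda r: r.ai_score, reverse=True)
```

Sorting by score puts the worst offenders at the top of the editor's queue. A flagged post is only a prompt to reread the highlighted sections, since we know some human-written posts still score high.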

While the world of SEO and content continues to grow through this advancement of artificial intelligence, we can see the value in checking for AI and continuing a culture of ensuring our content is helpful and valuable to readers.

Looking for help in AI content detection for your own team or outsourced writers? Try Originality, and let us know how you found the tool.

Final Thoughts on How to Spot AI Content

Just as AI tools will change and get even more powerful in the future, so will AI content detection. While this is not the most important thing to focus on within your content strategy, it may be worth investing some time and money into learning how to accurately detect AI-generated content.

If you want to start using an AI content detector, be mindful that even these detectors are tools and cannot always be right. There always needs to be a balance between keeping your content quality high according to your standards and ensuring that your writers are not relying on AI.

Currently, Originality AI is our chosen AI content detection tool, but if you know of others that you find to be accurate and affordable, we’d love to hear your thoughts!